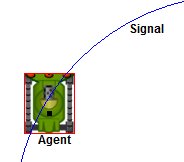

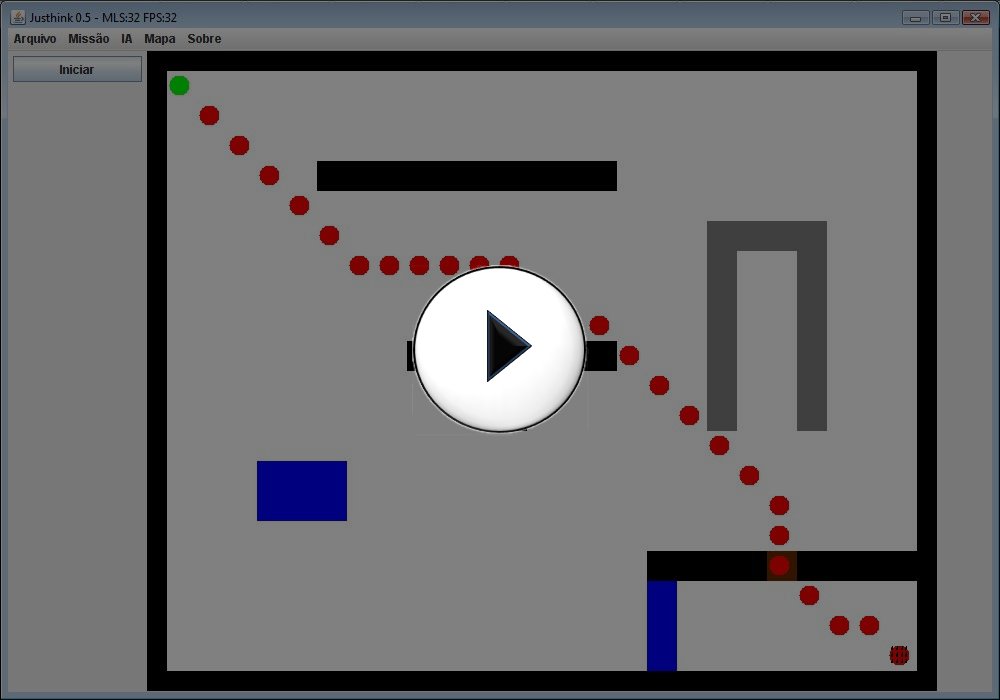

Figure 1. An intelligent agent detecting an object with the sensor on its right side. Points p1 and p2 define the line projected by the sensor, and an object intersects this line. D is the distance between the agent and the detected object.
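The geometry in Figure 1 can be illustrated with a small ray-casting sketch. It assumes that p1 is the sensor origin on the agent, that the sensor line is the segment from p1 to p2, and that the object is modeled as a circle. The circle model and the function name `sensor_distance` are hypothetical and are not taken from the figure. D is the distance from p1 to the first point where the segment meets the circle, or `None` if the sensor detects nothing.

```python
import math
from typing import Optional, Tuple

Point = Tuple[float, float]


def sensor_distance(p1: Point, p2: Point, center: Point, radius: float) -> Optional[float]:
    """Distance D from p1 along the segment p1->p2 to the first hit on a circle.

    Hypothetical model: p1 is the sensor origin on the agent, and the
    detected object is a circle with the given center and radius.
    Returns None when the sensor segment does not touch the circle.
    """
    # Direction of the sensor line and offset from the circle center to p1
    dx, dy = p2[0] - p1[0], p2[1] - p1[1]
    fx, fy = p1[0] - center[0], p1[1] - center[1]

    # Solve |p1 + t*(p2 - p1) - center|^2 = radius^2 for t (a quadratic in t)
    a = dx * dx + dy * dy
    if a == 0.0:
        return None  # degenerate sensor: p1 and p2 coincide
    b = 2.0 * (fx * dx + fy * dy)
    c = fx * fx + fy * fy - radius * radius
    if c <= 0.0:
        return 0.0  # p1 already lies inside or on the object

    disc = b * b - 4.0 * a * c
    if disc < 0.0:
        return None  # the infinite line misses the circle entirely

    sq = math.sqrt(disc)
    length = math.sqrt(a)  # length of the sensor segment |p2 - p1|
    # Check the nearer intersection first; t must lie within the segment [0, 1]
    for t in sorted(((-b - sq) / (2.0 * a), (-b + sq) / (2.0 * a))):
        if 0.0 <= t <= 1.0:
            return t * length
    return None  # intersections exist only beyond the sensor's range


if __name__ == "__main__":
    # Sensor pointing along +x with a 10-unit range; object of radius 1 centred at x = 5
    print(sensor_distance((0.0, 0.0), (10.0, 0.0), (5.0, 0.0), 1.0))
```

Using a parametric segment rather than an infinite line ensures that objects beyond p2 (outside the sensor's range) or behind the agent are not reported as detections.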